All for one and one for all, united we stand divided we fall.

Alexandre Dumas, The Three Musketeers

I've been putting off writing what I might call "What I've learned about accessibility in twenty years" for a while - partly because even though it's based on a presentation and therefore already written (in a sense), copying PowerPoint slides as images is not very satisfying. Mostly, though, it's because it's likely to be a really long post, and those tend not to perform well. Considering what I've seen in the industry recently, though, it's maybe past the time I should have done it. Either way, here it goes.

Before I seriously dive into this topic, I want to share a little information about myself. Over the past (almost) two decades I've worked with accessibility in both the public sector, where I was bound by Section 508 of the Rehabilitation Act (1973), and in the private sector, where I've worked with guidelines that are now published as the Web Content Accessibility Guidelines (or WCAG). Over that time I've not only built a significant amount of what we might call "product knowledge" about accessibility, but have built quite a bit of passion for the work as well. I'm going to attempt to share that passion and attempt to convince you to become what I call an "Accessibility Ally" (A11y*ally, A11y^2, or "Ally Squared") - someone who is actively supportive of a11y, or web accessibility.

What Is This Accessibility Stuff, Anyway?

A lot of discussions about interface accessibility start with impairment. They talk about permanent, temporary, and situational impairment. They talk about visual, auditory, speech, and motor impairment (and sometimes throw in neurological and cognitive impairment as well). They'll give you the numbers...how in the US, across all ages,

- approximately 8 percent of individuals report significant auditory impairment (about 4 percent are "functionally deaf" and about 4 percent are "hard of hearing", meaning they have difficulty hearing with assistance)

- approximately 4 percent of individuals report low or no vision (which are not the only visual impairments)

- approximately 8 percent of men (and nearly no women) report one of several conditions we refer to as "colorblindness"

- nearly 7 percent of individuals experience a severe motor or dexterity difficulty

- nearly 17 percent of individuals experience a neurological disorder

- nearly 20 percent of individuals experience cognitive difficulties

They might even tell you how all those numbers are really First World numbers and when you go into emerging markets, where reliable access to resources is more limited, the numbers double. They'll talk about how, in general, those numbers are skewed by age and how about 10 percent of people under the age of 65 report impairment while more than 25 percent of people between the ages of 65 and 74 report impairment (and nearly 50 percent of those 75 and older report impairment).

I don't generally like to start there...though I guess I just did. Accessibility is not about the user's impairment - or at least it shouldn't be - it's about the obstacles we - the product managers, content writers, designers, and engineers - place in the path of people trying to live their lives. Talking about impairment in numbers like this also tends to give the impression that impairment is not "normal" when the data clearly shows otherwise. Even accounting for a degree of comorbidity, the numbers indicate that most people experience some sort of impairment in their daily lives.

The other approach that's often taken is diving directly into accessibility and what I call impairment categories and their respective "solutions". The major problem here is a risk similar to what engineers typically refer to as "premature optimization". The "solutions" for visual and auditory and even motor impairments are relatively easy from an engineering point of view, even though neurological and cognitive difficulties are far more significant in terms of numbers. Rather than focus on which impairment falls into which of the four categories that define accessibility - Perceivable, Operable, Understandable, and Robust - we have to, as I like to say, see the forest and the trees. While there is benefit in being familiar with the Success Criteria in each of the Guidelines within the WCAG, using that as a focus will miss a large portion of the experience.

One other reason I have chosen this broader understanding of accessibility is that accessibility in interfaces is holistic. Everything in the interface - everything in a web page and every web page in a process - must be accessible in order to meet the definition of accessible. For example, we can't call a web page that meets visual guidelines but not auditory guidelines "accessible", and if the form on our page is accessible but the navigation is not, then the page is not accessible.

Why is Accessibility Important?

When considering accessibility, I often recall an experience interviewing a candidate for an engineering position and relate that story to those listening. This candidate, when asked about accessibility, responded something along the lines of "do you mean blind people - they can't see web pages anyway". I've also worked with designers and product managers who have complained about the amount of time spent building accessible interfaces for such a "small" group of users or flat out said accessibility isn't a priority. I've worked with content writers who are convinced their writing is clear enough for their intended audience and anyone confused by it is not in their intended audience - what I call the Abercrombie & Fitch Content Model.

For those who consider accessibility important, there are a few different approaches we might typically take when trying to sway those who tend to be less inclined to consider the importance of accessibility. In my experience, the least frequently made argument for the importance of accessibility is one of a moral imperative - making an interface accessible is the "right thing to do". While I agree, I won't argue that point here, simply because it's the least frequently made argument and this post is already bordering on too long as it is.

The approach people most frequently take in attempting to convince others accessibility is important is the anti-litigation approach. Making sure their interface is accessible is promoted as a matter of organizational security - a form of self-protection. In this approach, the typical method is a focus on the Success Criteria of the WCAG Recommendation alongside automated testing to verify that they have achieved A or AA level compliance. The "anti-litigation" approach is a pathway to organizational failure.

Make no mistake, the risk of litigation is significant. In the US, litigation in Federal court has increased approximately 400 percent year-over-year between 2015 and 2017, and at the time of this writing appears to be growing at roughly the same rate in 2018. Even more significant, cases have held third parties accountable and have progressed even when remediation was in progress, indicating the court is at least sometimes willing to consider a wider scope than we might typically think of in relation to these cases. To make matters even more precarious, businesses operating internationally face a range of penalties and enforcement patterns. Nearly all countries have some degree of statutory regulation regarding accessibility, even if enforcement and penalties vary. Thankfully, the international landscape is not nearly as varied as it was, as nearly all regulations follow the WCAG or are a derivative of those guidelines.

So why, when the threat of litigation both domestically and internationally is so significant, do I say a focus on the Success Criteria is a pathway to failure? My experience has repeatedly shown that even if all Success Criteria are met, an interface may not be accessible - an issue I'll go into a little further when I talk about building and testing interfaces - and only truly accessible interfaces allow us to succeed.

What happens when your interface is not accessible - aside from the litigation already discussed? First, it's extremely unlikely that you'll know your interface has accessibility issues, because 9 of 10 individuals who experience an accessibility issue don't report it. Your analytics will not identify those failing to convert due to accessibility issues - they'll be mixed in with any others you're tracking. Second, those abandoned transactions will be costly in the extreme. In the UK, those abandoning transactions because of accessibility issues account for roughly £12 billion (GBP) annually - which is roughly 10 percent of the total market. Let me say that again because it deserves to be emphasized - those abandoning because of accessibility issues represent roughly 10 percent of the total market - not 10 percent of your market share - 10 percent of the total market.

Whether your idea of success is moral superiority, ubiquity, or piles of cash, the only sure way to that end is a pathway of accessibility.

How Do We Become an Accessibility Ally?

Hearing "it's the right thing to do" or "this is how we can get into more homes" or, sometimes, the £12 billion (GBP) number - one of those often convinces people to become at least a little interested in creating accessible interfaces, even if they're not quite to the point of wanting to become an Accessibility Evangelist. The good news is that even something as simple as making the creation of accessible interfaces a priority can make you an Accessibility Ally.

The question then becomes how do we take that first step - how do we create accessible interfaces? The first rule you have to know about creating an accessible interface is that it takes the entire team. Accessibility exists at every level - the complexity of processes (one of the leading causes of abandonment), the content in the interface, the visual design and interactions, and how all of that is put together in code by the engineers - all of it impacts accessibility.

At this point, I should give fair warning that although I'll try to touch on all the layers of an interface, my strengths are in engineering, so the Building and Testing Interfaces section may seem weighted a little heavier, even though it should not be considered more important.

Designing for Accessibility

If we were building a house we wanted to be accessible, we would recognize that we have to start at the beginning, even before blueprints are drawn, making decisions about how many levels it will have, where it will be located, and how people will approach it. Once those high-level decisions are made, we might start drawing blueprints - laying out the rooms and making sure that doorways and passages have sufficient space. We would alter design elements like cabinet and counter height and perhaps choose flooring surfaces that pose fewer navigation difficulties. To remodel a house to make it accessible, while not impossible, is often very difficult...and the same concepts apply to building interfaces.

Most projects that strive to be fully accessible start with Information Architecture, or IA (you can find out more about IA at https://www.usability.gov/what-and-why/information-architecture.html). This is generally a good place to begin, unless what you're building is an interface for a process - like buying or selling something or signing up for something. In the case of a process interface, you've basically decided you're building a house with multiple levels and you have accessibility issues related to traversing those levels...to continue our simile, you have to decide if you're going to have an elevator or a device that traverses stairs...but your building will still need a foundation. Information Architecture is like the foundation of your building. Can you build a building without a foundation? Sure. A lot of pioneers built log cabins by laying the first course of logs directly on the ground...but - and this is a very big but - those structures did not last.

If you decide to go another route than good IA, the work further on will be more difficult, and much of it will have to be reworked, because IA affects a core aspect of the Accessibility Tree - the accessible name - the most critical piece of information assistive technology can have about an element of an interface.

Once your Information Architecture is complete, designing for accessibility is considerably less complex than most people imagine it to be. Sure, there are some technical bits that designers have to keep in mind - like luminance contrast and how everything needs a label - but there are loads of good, reliable resources available...probably more so for design than for the engineering side of things. For example, there are several resources available from the Paciello Group and Deque, organizations that work with web accessibility almost exclusively, as well as both public and private organizations that have made accessibility a priority, like Government Digital Service, eBay, PayPal, and even A List Apart.
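To make "luminance contrast" a little less abstract, here is a minimal sketch of the contrast calculation as the WCAG defines it. The function names are my own (they don't come from any library), colours are assumed to be given as `#rrggbb`, and the 4.5:1 and 3:1 thresholds are the AA values for normal and large text.

```python
def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a colour written as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colours, from 1 (identical) up to 21."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """True if the pair meets the AA contrast threshold for the given text size."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

With this sketch, `#767676` text on a white background passes AA at roughly 4.54:1, while the barely lighter `#777777` falls just short at roughly 4.48:1, which is a good reminder that eyeballing contrast is not reliable.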

With the available resources you can succeed as an Accessibility Ally as long as you keep one thought at the fore of your mind - can someone use this interface the way they want rather than the way I want? What if they search a list of all the links on your site - does the text inside the anchor tell them what's on the other side? What if they're experienced users and want to jump past all the stuff you've crammed into the header but they're not using a scrollbar - is there something that tells them how to do that? Keep in mind that as a designer, you're designing the interface for everyone, not just those who can [insert action here] like you can.
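The "list of all the links" question can be approximated with a few lines of Python. This is only a sketch using the standard library's `html.parser`: the class and function names are mine, the list of vague phrases is a hypothetical starting point, and it only looks at text inside the anchor (it ignores `alt` text on linked images and attributes like `aria-label`).

```python
from html.parser import HTMLParser

# Hypothetical list of phrases that say nothing out of context; extend as needed.
VAGUE_LINK_TEXT = {"click here", "here", "read more", "more", "learn more", "link"}


class LinkTextCollector(HTMLParser):
    """Collects (href, text) for every <a href> element, like a links list would."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the anchor currently open, if any
        self._text = []     # text fragments seen inside that anchor

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:
                self._href = href
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse whitespace so line breaks in the markup don't matter.
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def vague_links(html: str):
    """Return the links whose text is empty or tells the reader nothing."""
    collector = LinkTextCollector()
    collector.feed(html)
    collector.close()
    return [
        (href, text)
        for href, text in collector.links
        if not text or text.lower().strip(" .!>") in VAGUE_LINK_TEXT
    ]
```

Run against a page, anything this returns is a link that will read as "click here, click here, read more" to someone navigating by links alone. An empty result does not prove the link text is good, only that it isn't on the list.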

Building and Testing Interfaces

When building accessible interfaces, there is a massive amount to learn about the Accessibility Tree and how and when to modify it as well as the different states a component might have. Much has been made of ARIA roles and states, but frankly, ARIA is one of the worst (or perhaps I might say most dangerous) tools an engineer can use.

We're going to briefly pause the technical stuff here for a short story from my childhood (a story I'll tie to ARIA, but not till the end).

When I was a child - about 8 years old - my family and I visited a gift shop while on vacation in Appalachia. In this particular gift shop they sold something that my 8-year-old mind thought was the greatest thing a kid could have - a bullwhip. I begged and pleaded, but my parents would not allow me to purchase this wondrous device that smelled of leather, power, and danger. I was very dismayed...until, as we were leaving, I saw a child about my age flicking one and listening to the distinctive crack...until he snapped it behind his back and stood up ramrod straight as a look of intense pain crossed his face.

ARIA roles and states are like that bullwhip. They look really cool. You're pretty sure you would look like a superhero with them coiled on your belt. They smell of power and danger and when other engineers see you use them, you're pretty sure they think you are a superhero. They're very enticing...until they crack across your back.

Luckily, ARIA roles and states are almost unnecessary. Yes, they can take your interface to the next level, but they are not for the inexperienced or those who lack caution. If you're creating interfaces designed for a browser, the best tool you have to build an accessible interface is Semantic HTML. Yes, it's possible to build an interface totally lacking accessibility in native HTML. Yes, it's possible to build an accessible interface in Semantic HTML and then destroy the accessibility with CSS. Yes, it's possible to build an accessible interface with JavaScript or to destroy an accessible interface with JavaScript. None of the languages we use in front-end engineering build accessibility or destroy accessibility on their own - that takes engineers. The languages themselves are strong enough...if you are new to accessibility, start somewhere other than the bullwhip.
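One way to keep the bullwhip on the shelf is to look for places where a generic element has been given an ARIA role that a native element already provides. The sketch below is illustrative only: the mapping is deliberately incomplete, the names are mine, and it checks nothing beyond `<div>` and `<span>` elements.

```python
from html.parser import HTMLParser

# Illustrative, incomplete mapping from an ARIA role to the native HTML
# element that already carries that role without any ARIA at all.
NATIVE_EQUIVALENT = {
    "button": "<button>",
    "link": "<a href>",
    "navigation": "<nav>",
    "main": "<main>",
    "checkbox": '<input type="checkbox">',
    "heading": "<h1> to <h6>",
}


class RoleAudit(HTMLParser):
    """Records generic elements whose role could be a native element instead."""

    def __init__(self):
        super().__init__()
        self.findings = []  # (tag, role, suggested native element)

    def handle_starttag(self, tag, attrs):
        role = dict(attrs).get("role")
        if tag in ("div", "span") and role in NATIVE_EQUIVALENT:
            self.findings.append((tag, role, NATIVE_EQUIVALENT[role]))


def replaceable_roles(html: str):
    """Return every <div>/<span> that uses a role with a native equivalent."""
    audit = RoleAudit()
    audit.feed(html)
    audit.close()
    return audit.findings
```

A `<div role="button">` also needs focus handling and keyboard events written by hand before it behaves like a button, all of which `<button>` provides for free. That is the practical reason to reach for Semantic HTML first.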

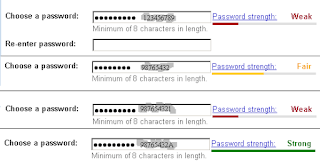

The next topic most people jump to from here is how to test an interface to make sure it is accessible. This is another place where things can get tricky, because there are a number of different tools, they all serve a different purpose, and they may not do what they're expected to do. For instance, there are tools that measure things like luminance contrast, whether or not landmarks are present, or if any required elements or attributes are missing - validating according to the Success Criteria in the WCAG. In this realm, I prefer the aXe Chrome plug-in (by Deque). Nearly all these tools are equally good at what they do, but - and here's one of the places where it can go sideways - tools that validate according to the Success Criteria are a bit like spellcheckers - they can tell you if you spelled the word correctly, but they cannot tell you if you've selected the correct word.
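The spellchecker comparison is easy to demonstrate. Below is a small sketch of the kind of rule an automated tool applies, here "every image needs an `alt` attribute", written with Python's standard `html.parser`. The names are mine and this is not how any particular tool is implemented.

```python
from html.parser import HTMLParser


class AltAudit(HTMLParser):
    """Records the src of every <img> that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            # alt="" is deliberately allowed: it marks an image as decorative.
            if "alt" not in attributes:
                self.missing.append(attributes.get("src"))


def images_missing_alt(html: str):
    """Return the src of each image that fails the 'has alt' rule."""
    audit = AltAudit()
    audit.feed(html)
    audit.close()
    return audit.missing
```

Given `<img src="chart.png">` and `<img src="logo.png" alt="image">`, only the first is reported. The second passes the rule even though "image" tells a screen reader user nothing, which is exactly the "spelled correctly, wrong word" problem: the check can confirm the attribute exists, not that its content is useful.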

Beyond Success Criteria validation, there are other tools available (or soon to be available) to help verify accessibility, the most common of which are screen readers. Of the screen readers available, some are free and some are paid - VoiceOver on Mac and JAWS on Windows are the most popular in the US - JAWS is not free, but there is a demo version you can run for about 40 minutes at a time. NVDA (another Windows tool) and ChromeVox are free, but less popular. In addition to screen readers, in version 61 of Firefox the dev tools should include a tool that gives visibility into the Accessibility Tree (version 61 is the planned release; this version is not available at the time of this writing).

One thing to remember with any of these - just because it works one way for you doesn't mean it will work that way for everyone. Accessibility platforms are multiple tools that share an interface. Each tool is built differently - typically according to the senior engineer's interpretation of the specification. While the results are often very similar, they will not always be the same. For example, some platforms that include VoiceOver don't recognize a dynamically modified Accessibility Tree, meaning if you add an element to the DOM it won't be announced, or it may only be announced if certain steps are taken, and the exact same code running in JAWS will announce the content multiple times. Another thing to remember is that there is no way you will ever know all the edge cases - in the case of VoiceOver not recognizing dynamically added elements mentioned previously, it took more effort than it should have to demonstrate conclusively to the stakeholders that the issue was a difference in the platform.

Finally, when you're trying to ensure your interface is accessible, you will have to manually test it - there is simply no other way - and it should be tested at least once every development cycle. Granted, not every user story will affect accessibility, but because we have that holistic view of accessibility that acknowledges that accessibility exists at every level, we know that most stories will affect accessibility.

As with design, there are resources available, but good resources are more difficult to find because engineers are opinionated and usually feel like they understand specifications, even though what they understand is their interpretation of the specifications. If you want to become an accessibility expert, it can be done, but the process is neither quick nor easy. If you want to become an A11y^2, well that process is quicker and easier and mostly consists of keeping everything said in this section in mind. Understand accessibility holistically. Make "Semantic HTML" and "ARIA is a last resort" your mantras. Check your work with one of the WCAG verification tools (again, I prefer the aXe Chrome plug-in) and at least one screen reader. Check it manually, and check it frequently.

Being an Accessibility Ally

Being an Accessibility Ally is really not complicated. You don't need to be an accessibility expert (though you certainly can be one if you want)...you just need to see accessibility as a priority and the pathway to success. Being an Accessibility Ally means you're actively supportive of accessibility.

To be actively supportive, we need to understand accessibility in a more holistic way than we've traditionally thought about it, and we need to understand that not only does accessibility accumulate, its opposite accumulates as well. In other words, inaccessibility anywhere is a threat to accessibility everywhere.

To be actively supportive, we need to do more than act the part by designing and building things like stair-ramps with ramps too steep to safely navigate with a wheelchair or Norman doors. We need to make building interfaces that are perceivable, operable, understandable, and robust a priority...and we need to make that priority visible to others.

When we're actively supportive and people see our action, only then will we be the ally we all need...and we all need all the allies we can get.

For another take on age and web interfaces, you may want to take a look at "The Danger of an Adult-oriented Internet", a post in this blog from 2013, or "A11y Squared", a post from 2017.